It’s pretty hard to talk about technology today without artificial intelligence, or AI, entering into the conversation. It seems to be everywhere… and growing. Businesses are using it to operate more efficiently, it’s resulting in safer and more useful products, and it has the promise of allowing us to personalize our worlds as devices learn our preferences.

The age of AI

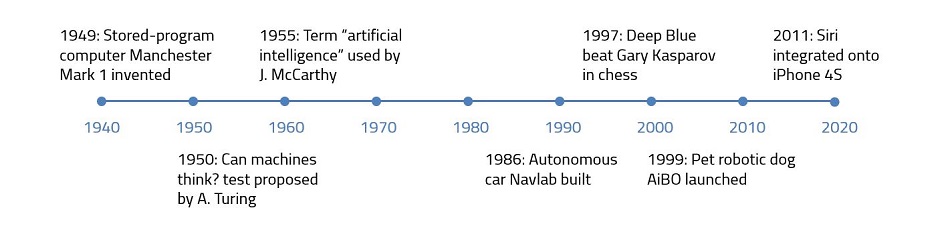

AI refers to the use of machines to simulate human thinking, including learning and problem solving. Scientists have been looking to replicate the brain for a long time, and the term “artificial intelligence” has been around since the mid-20th century. Designs for mechanical computing machines go back much further, at least to the early 19th century. But for most of their history, computers have simply run algorithms – fixed recipes or instruction sets created by their human programmers. In contrast, an AI system can adjust its own rules, and in effect arrive at new ones, as it learns and adapts to its task.

The whole notion of AI stems from the realization that, for all of our technological innovation, our naturally evolved brains can still do many things far better than machines can. This, of course, raises the question of how the brain works – and how we can apply what we learn about it to technology. We call many of our systems “smart,” but are they as intelligent as a human brain?

Machine learning

Perhaps unsurprisingly, it is extraordinarily difficult to create an artificial brain with all the same broad capabilities as a human brain. What we have been more successful with is narrow AI – that is, simulating human intelligence in a single facet or area such as playing board games. In 2016, DeepMind’s AlphaGo computer program defeated Lee Sedol, one of the world’s top professional players, in Go, a strategy game that originated in China. Before that match, AlphaGo first learned from records of games played by humans and then played against itself, accumulating experience and getting stronger over time. When software learns from experience, it is called machine learning. And it turns out that many of the AI applications we use in our everyday lives, including virtual personal assistants such as Siri and Alexa and predictive traffic navigation apps, belong to this subset of AI.
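To make “learning from experience” a little more concrete, here is a minimal sketch in Python. It is not how AlphaGo works. It is a toy in which a program plays many rounds of an imaginary game, keeps track of how often each opening move wins, and gradually favors the move with the best record. The win rates, the function name `learn_best_move`, and its parameters are all made up for illustration.

```python
import random

# Hypothetical chance of winning after each of three opening moves.
# The learner never reads these directly; it only sees wins and losses.
TRUE_WIN_RATES = [0.2, 0.5, 0.8]


def learn_best_move(rounds=10_000, explore=0.1, seed=0):
    """Play many rounds and return (best_move, estimated_win_rates)."""
    rng = random.Random(seed)
    plays = [0] * len(TRUE_WIN_RATES)
    wins = [0] * len(TRUE_WIN_RATES)

    def estimate(move):
        # Win rate observed so far; 0.0 for a move that was never tried.
        return wins[move] / plays[move] if plays[move] else 0.0

    for _ in range(rounds):
        if rng.random() < explore:
            # Occasionally try a random move to keep gathering experience.
            move = rng.randrange(len(TRUE_WIN_RATES))
        else:
            # Otherwise play the move that has worked best so far.
            move = max(range(len(TRUE_WIN_RATES)), key=estimate)
        plays[move] += 1
        if rng.random() < TRUE_WIN_RATES[move]:
            wins[move] += 1

    estimates = [estimate(m) for m in range(len(TRUE_WIN_RATES))]
    best = max(range(len(estimates)), key=estimates.__getitem__)
    return best, estimates


if __name__ == "__main__":
    best, estimates = learn_best_move()
    print("preferred move:", best)
    print("estimated win rates:", [round(e, 2) for e in estimates])
```

Nobody tells the program which move is best. It works that out from the results of its own games, which is the essence of the idea, even though real systems use far richer models.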

Powered by powerful chips

There are several reasons why the number of AI applications is growing. One is computing power. AI applications are extremely compute- and memory-intensive: billions of computations must take place very quickly, and most current implementations require billions of transfers to and from memory to get the job done. Only recently have we had the kind of horsepower needed to attack these problems the way we are today. We can thank the continued evolution of semiconductors for the explosion in capabilities that makes this possible.

Data is another reason. The current approach requires huge numbers of examples from which machines can learn, and the internet puts countless examples at our fingertips. Data center platforms are also being designed specifically to enable machine learning business applications such as customer support and fraud detection. Without recent advances in data storage, keeping and serving all of those examples would be much more difficult.

AI in our everyday lives

For all the buzz about AI, we’re only just starting to see what it can do. Probably the biggest area where we interact with AI is through so-called “predictive analytics.” You see this when Amazon suggests other books you might like, or when Netflix predicts which movies you may like to watch next.
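As a rough illustration of the idea behind such suggestions, the Python sketch below recommends books by counting what other customers bought alongside a given title. This is not how Amazon or Netflix actually build their recommendations. The purchase data, the customer names, and the `suggest` function are invented for the example.

```python
from collections import Counter

# Made-up purchase histories: customer -> set of books bought.
PURCHASES = {
    "ana": {"Dune", "Neuromancer", "Foundation"},
    "ben": {"Dune", "Foundation", "Hyperion"},
    "cho": {"Dune", "Foundation"},
    "dev": {"Emma", "Persuasion"},
}


def suggest(title, purchases, top_n=2):
    """Return up to top_n books most often bought together with title."""
    counts = Counter()
    for basket in purchases.values():
        if title in basket:
            # Count every other book in the same customer's history.
            counts.update(basket - {title})
    # Most frequent first; ties are broken alphabetically for stable output.
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return [book for book, _ in ranked[:top_n]]


if __name__ == "__main__":
    print(suggest("Dune", PURCHASES))  # ['Foundation', 'Hyperion']
```

The more purchase histories the program sees, the better its guesses become, which is one reason large collections of examples matter so much.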

Facial recognition is another application in widespread use today. In addition to unlocking smartphones, this technology is being used in building security, photo tagging, and online eyewear shopping.

AI is also being applied in many areas that we don’t see, such as credit card fraud detection and mortgage loan approval, and more applications are on their way. We’re excited to see how AI will continue to transform industries, injecting previously unimagined opportunities into our everyday lives.